Executing a Python Script on GPU Using CUDA and Numba in Windows 10 | by Nickson Joram | Geek Culture | Medium

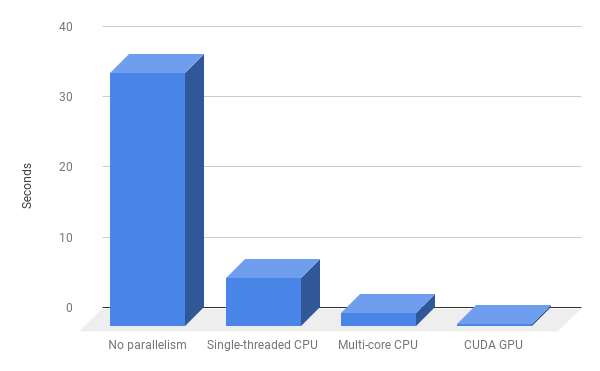

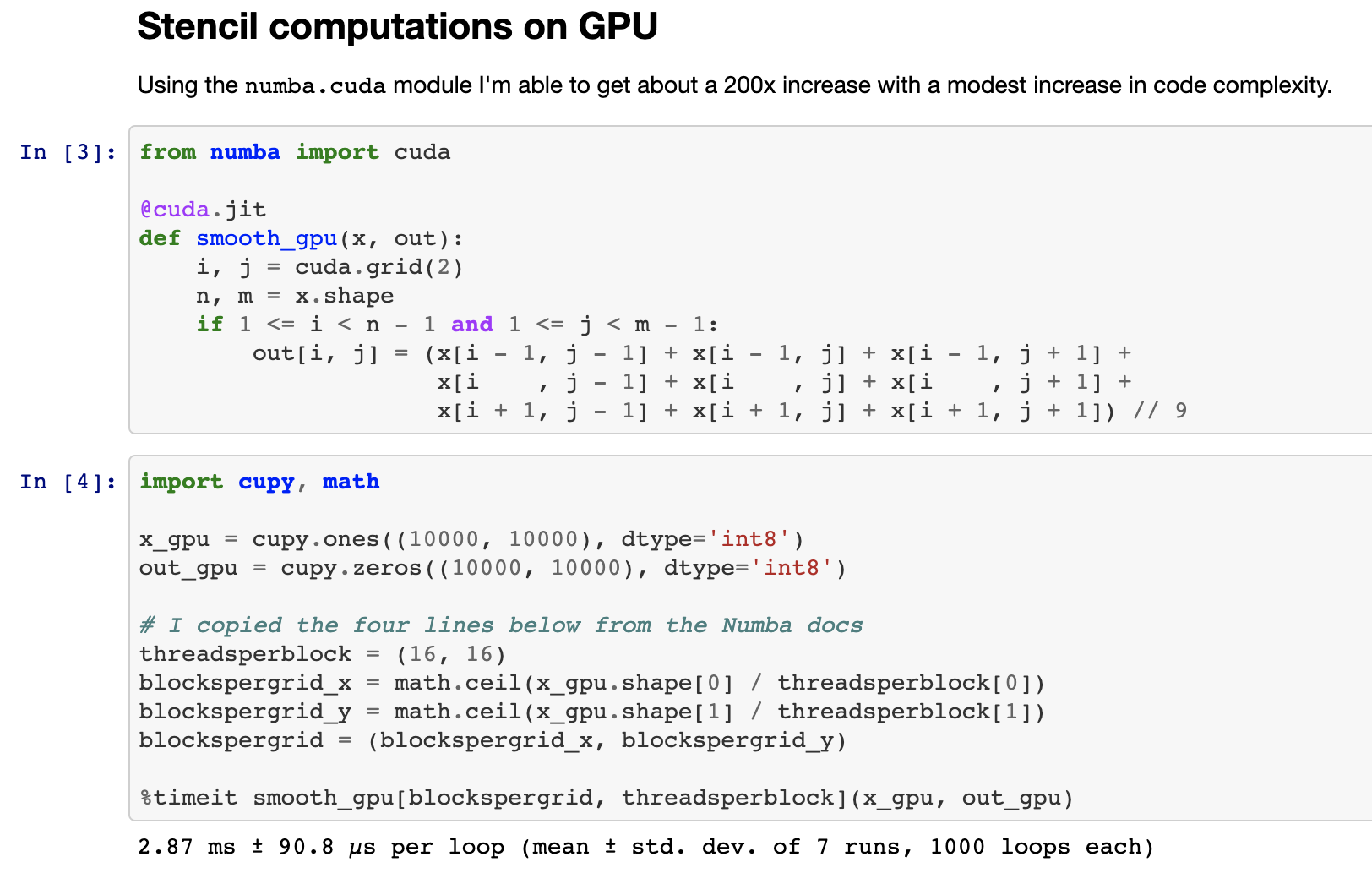

Python, Performance, and GPUs. A status update for using GPU… | by Matthew Rocklin | Towards Data Science

machine learning - How to make custom code in python utilize GPU while using Pytorch tensors and matrice functions - Stack Overflow

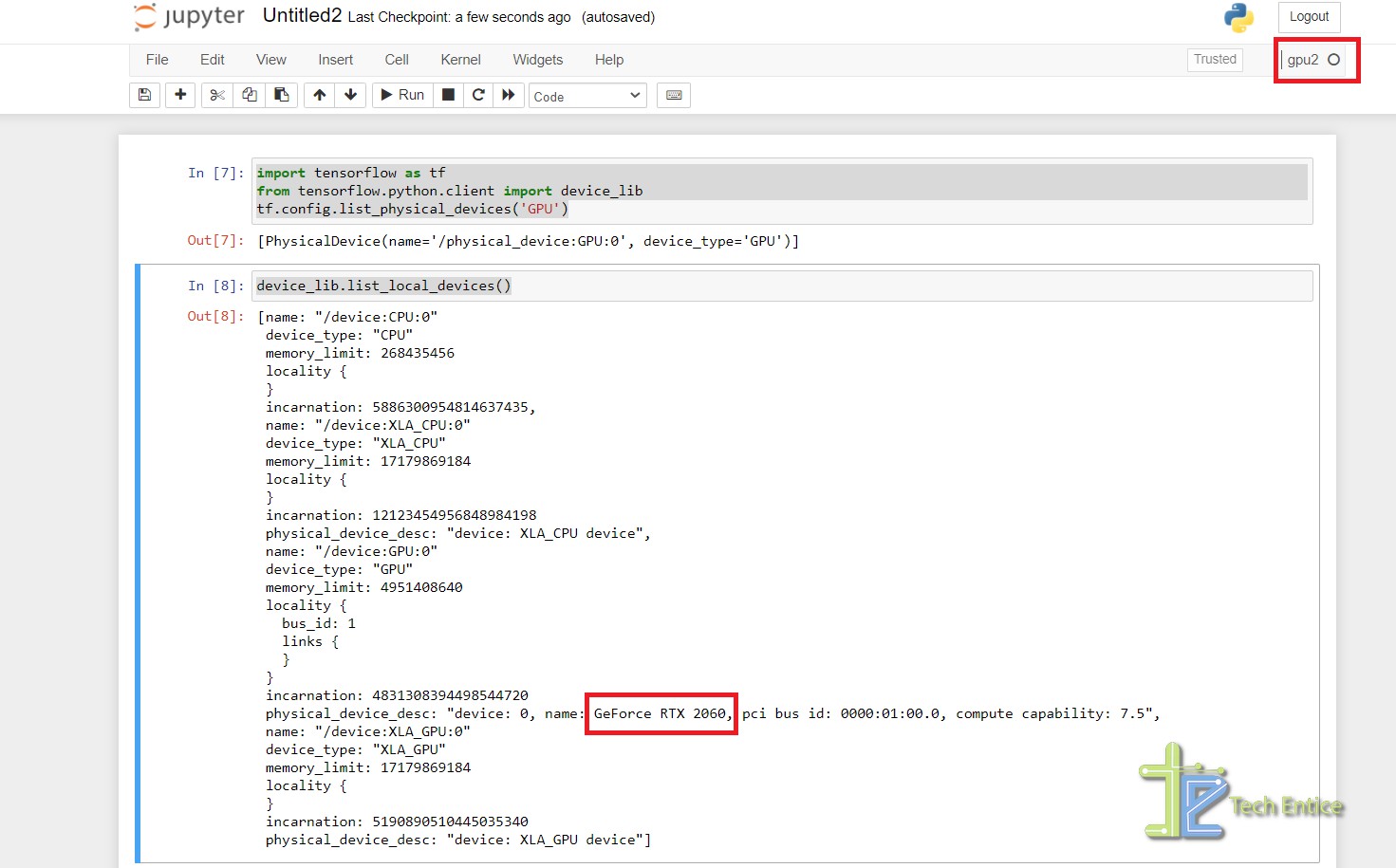

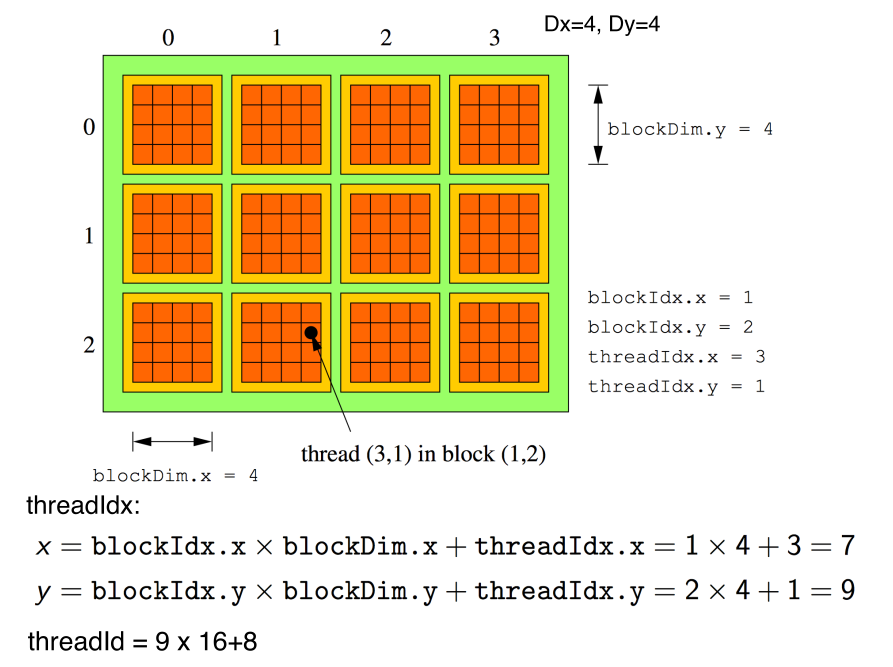

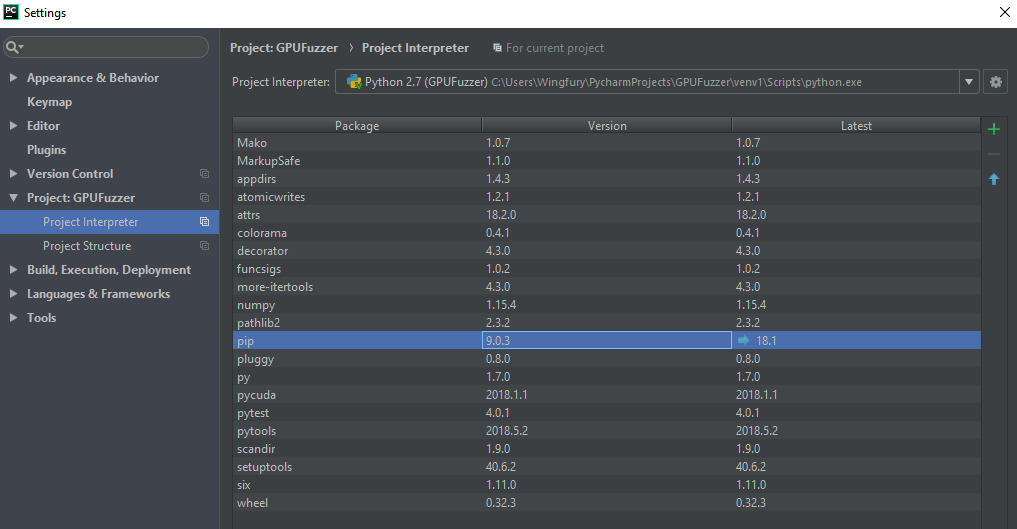

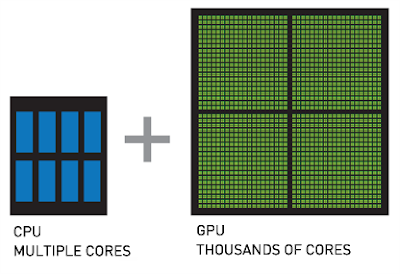

A Complete Introduction to GPU Programming With Practical Examples in CUDA and Python - Cherry Servers

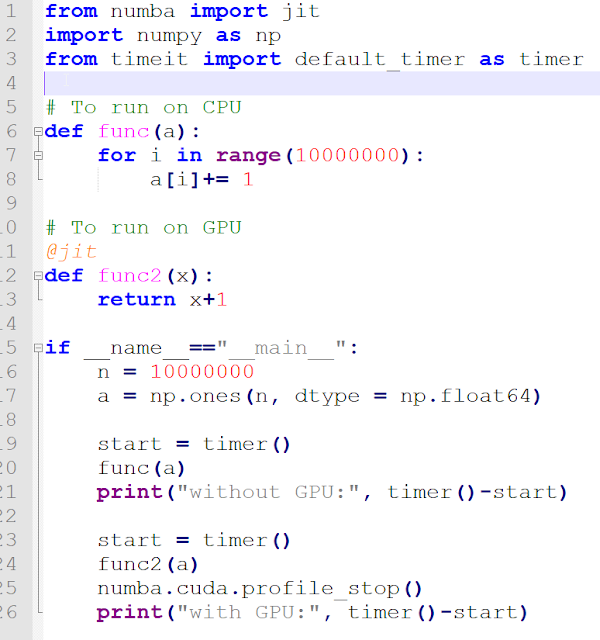
